NeROIC: Neural Rendering of Objects from Online Image Collections


Zhengfei Kuang

University of Southern California

Kyle Olszewski

Snap Inc.

Menglei Chai

Snap Inc.

Zeng Huang

Snap Inc.

Panos Achlioptas

Snap Inc.

Sergey Tulyakov

Snap Inc.

Abstract

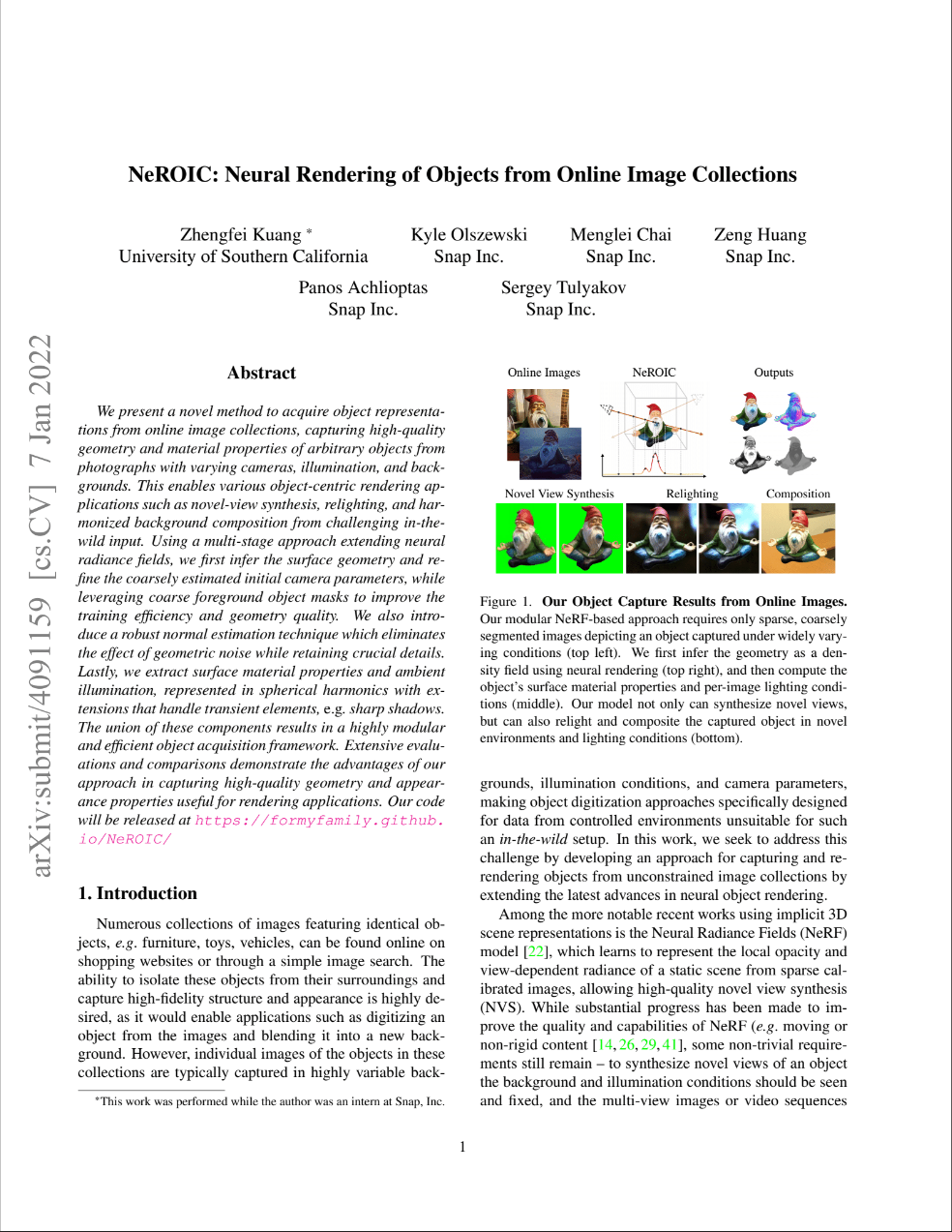

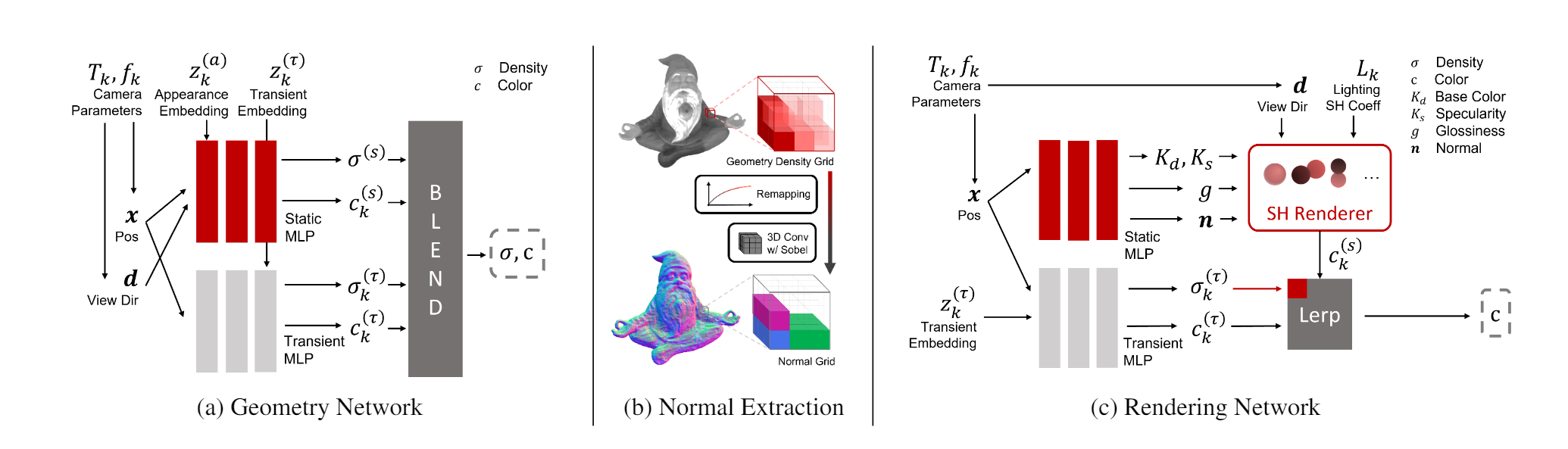

We present a novel method to acquire object representations from online image collections, capturing high-quality geometry and material properties of arbitrary objects from photographs with varying cameras, illumination, and backgrounds. This enables various object-centric rendering applications such as novel-view synthesis, relighting, and harmonized background composition from challenging in-the-wild input. Using a multi-stage approach extending neural radiance fields, we first infer the surface geometry and refine the coarsely estimated initial camera parameters, while leveraging coarse foreground object masks to improve the training efficiency and geometry quality. We also introduce a robust normal estimation technique which eliminates the effect of geometric noise while retaining crucial details. Lastly, we extract surface material properties and ambient illumination, represented in spherical harmonics with extensions that handle transient elements, e.g. sharp shadows. The union of these components results in a highly modular and efficient object acquisition framework. Extensive evaluations and comparisons demonstrate the advantages of our approach in capturing high-quality geometry and appearance properties useful for rendering applications.
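The abstract says coarse foreground masks improve training efficiency and geometry quality. One common way to use such masks in NeRF-style training is to supervise each ray's accumulated opacity with the mask value through a binary cross-entropy term. That choice is an assumption made here for illustration, not a confirmed description of NeROIC's loss, and the helper name `mask_bce_loss` is hypothetical.

```python
import math
from typing import Sequence


def mask_bce_loss(opacities: Sequence[float], mask: Sequence[float], eps: float = 1e-6) -> float:
    """Mean binary cross-entropy between per-ray accumulated opacity and a 0/1 foreground mask.

    Hypothetical helper: opacities[i] is the accumulated opacity (sum of volume-rendering
    weights) along ray i, and mask[i] is 1 if that pixel is foreground, else 0.
    """
    if len(opacities) != len(mask) or not mask:
        raise ValueError("opacities and mask must be non-empty and of equal length")
    total = 0.0
    for a, m in zip(opacities, mask):
        a = min(max(a, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += -(m * math.log(a) + (1.0 - m) * math.log(1.0 - a))
    return total / len(mask)


# Rays that agree with the mask cost little; rays that disagree cost a lot.
good = mask_bce_loss([0.99, 0.01], [1, 0])
bad = mask_bce_loss([0.01, 0.99], [1, 0])
```

Because the mask only says "object or not" per pixel, this term can be computed from coarse masks without any per-pixel color supervision.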


Overview

Our two-stage model takes as input images of an object captured under different conditions. Using camera poses and foreground object masks obtained from other state-of-the-art methods, we first train a NeRF-based network to optimize the geometry of the scanned object and refine the camera poses. We then compute surface normals from this geometry (represented by a density function) using our normal extraction layer. Finally, our second-stage model decomposes the material properties of the object and solves for the lighting conditions of each image.
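The normal extraction step starts from a density field. A common baseline, sketched below, takes the outward normal as the negated, normalized density gradient, n = -∇σ / ‖∇σ‖, estimated here with central finite differences. This is only the plain-gradient baseline: NeROIC's own normal extraction layer is described as robust to geometric noise, which this sketch does not attempt. The toy Gaussian density and the name `normal_from_density` are hypothetical.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def normal_from_density(sigma: Callable[[Vec3], float], x: Vec3, h: float = 1e-4) -> Vec3:
    """Surface normal as the negated, normalized density gradient (central differences).

    Density increases toward the object's interior, so the outward normal points
    against the gradient. Returns (0, 0, 0) where the gradient vanishes.
    """
    grad = []
    for i in range(3):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        grad.append((sigma(tuple(xp)) - sigma(tuple(xm))) / (2.0 * h))
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(-g / norm for g in grad)


def gaussian_blob(p: Vec3) -> float:
    """Toy density (hypothetical): highest at the origin, decaying outward."""
    return math.exp(-(p[0] ** 2 + p[1] ** 2 + p[2] ** 2))


n = normal_from_density(gaussian_blob, (0.5, 0.0, 0.0))  # approximately (1, 0, 0)
```

Raw gradients of a learned density are often noisy, which is the motivation the page gives for a more robust normal estimation layer.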

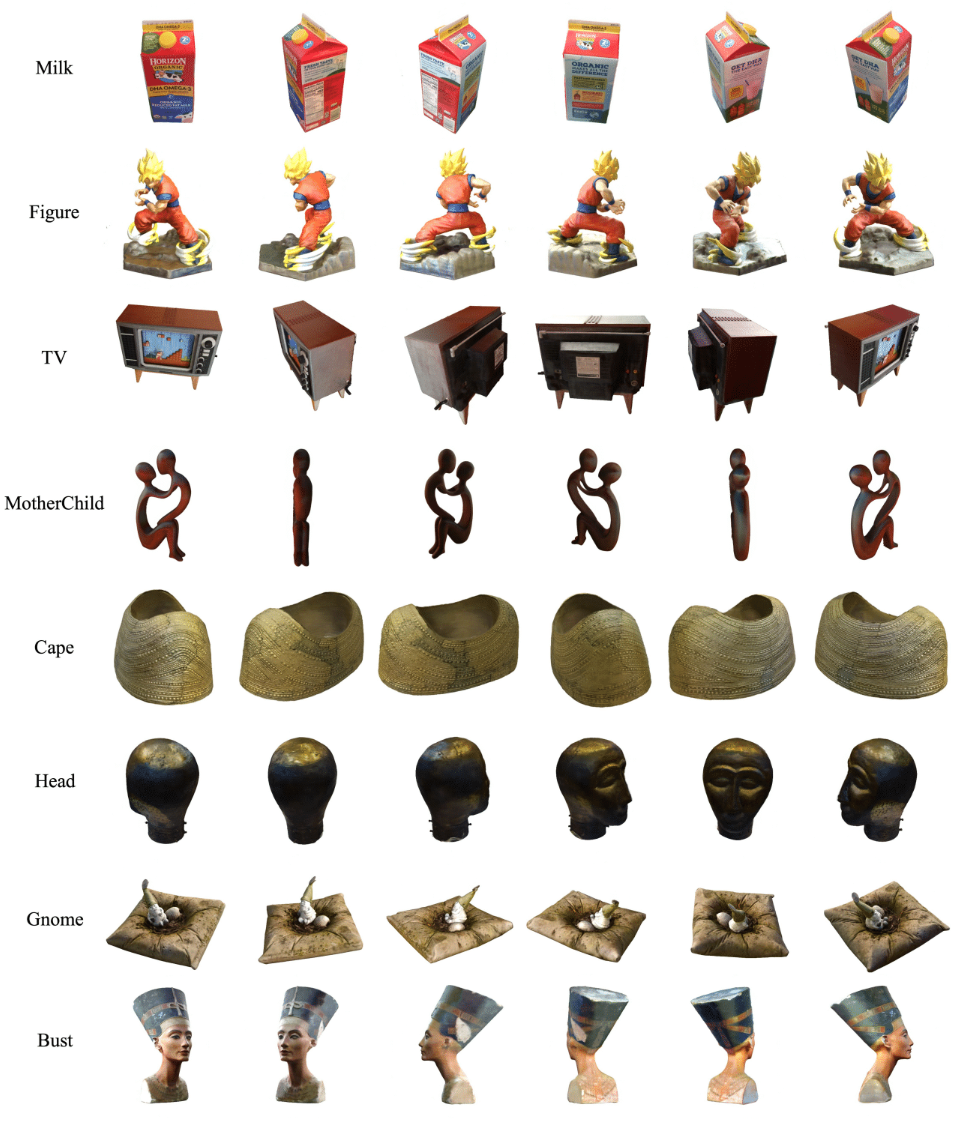

Novel View Synthesis

Given online images of the same object, our model can synthesize novel views of it under the lighting conditions of the training images.
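Novel views from a NeRF-based model are produced by volume rendering: samples along each camera ray are alpha-composited front to back using their densities and colors. The sketch below shows the standard NeRF quadrature, C = Σᵢ Tᵢ (1 - exp(-σᵢ δᵢ)) cᵢ with Tᵢ the transmittance before sample i, plus an optional background color weighted by the remaining transmittance. NeROIC's handling of per-image lighting is not modeled, and the name `composite_ray` is hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(
    sigmas: Sequence[float],
    colors: Sequence[RGB],
    deltas: Sequence[float],
    background: RGB = (0.0, 0.0, 0.0),
) -> Tuple[RGB, float]:
    """Alpha-composite samples along one ray, front to back (NeRF quadrature).

    sigmas[i], colors[i], deltas[i] are the density, RGB color, and segment length
    of sample i. Returns (pixel_rgb, accumulated_opacity).
    """
    transmittance = 1.0  # fraction of light not yet absorbed before the current sample
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    opacity = 1.0 - transmittance  # equals the sum of all weights
    # Light that passes through the object comes from the background.
    pixel = tuple(rgb[k] + transmittance * background[k] for k in range(3))
    return pixel, opacity


solid, acc_solid = composite_ray([1e3], [(1.0, 0.0, 0.0)], [1.0], background=(0.0, 0.0, 1.0))
empty, acc_empty = composite_ray([0.0, 0.0], [(1.0, 1.0, 1.0)] * 2, [0.5, 0.5], background=(0.0, 0.0, 1.0))
```

Blending with the leftover transmittance is one simple way to place a rendered object over a new background, in the spirit of the background composition application mentioned in the abstract.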

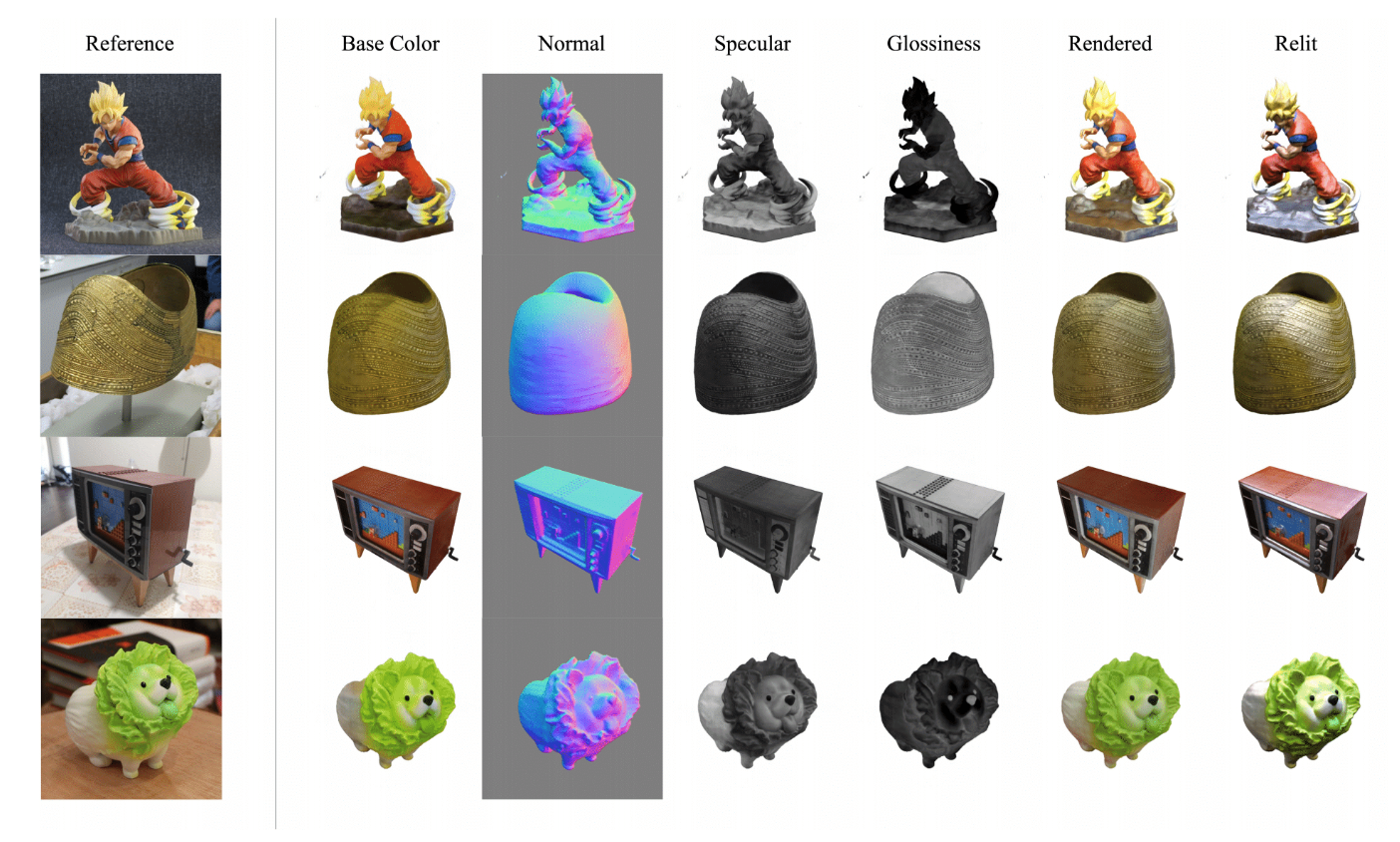

Material Decomposition

Our model also recovers the material properties of the captured object (albedo, specularity, and roughness maps) as well as its surface normals.
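To illustrate how albedo, specularity, and roughness values combine at a single surface point, the sketch below evaluates a Lambertian diffuse term plus a Blinn-Phong specular lobe for one directional light. This is a stand-in shading model chosen for illustration, not NeROIC's reflectance model. The roughness-to-exponent mapping 2/r² - 2 is one commonly used approximation, the specular lobe is left unnormalized for simplicity, and all function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(_dot(v, v))
    return (0.0, 0.0, 0.0) if length == 0.0 else (v[0] / length, v[1] / length, v[2] / length)


def shade_point(albedo: Vec3, specular: float, roughness: float,
                normal: Vec3, light_dir: Vec3, view_dir: Vec3, light_rgb: Vec3) -> Vec3:
    """Lambert diffuse + unnormalized Blinn-Phong specular for one directional light.

    Stand-in model for illustration only; light_dir and view_dir point away from the surface.
    """
    n, l, v = _normalize(normal), _normalize(light_dir), _normalize(view_dir)
    n_dot_l = max(_dot(n, l), 0.0)
    if n_dot_l == 0.0:
        return (0.0, 0.0, 0.0)  # light is at or below the surface horizon
    h = _normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))  # half vector
    r = min(max(roughness, 1e-2), 1.0)  # keep the exponent finite and non-negative
    exponent = 2.0 / (r * r) - 2.0  # rougher surface -> smaller exponent -> wider lobe
    spec = specular * max(_dot(n, h), 0.0) ** exponent
    return tuple(light_rgb[k] * n_dot_l * (albedo[k] / math.pi + spec) for k in range(3))


matte = shade_point((1.0, 1.0, 1.0), 0.0, 0.5, (0, 0, 1), (0, 0, 1), (0, 0, 1), (1.0, 1.0, 1.0))
shiny = shade_point((1.0, 1.0, 1.0), 0.5, 0.5, (0, 0, 1), (0, 0, 1), (0, 0, 1), (1.0, 1.0, 1.0))
backlit = shade_point((1.0, 1.0, 1.0), 0.5, 0.5, (0, 0, 1), (0, 0, -1), (0, 0, 1), (1.0, 1.0, 1.0))
```

Separating these maps is what makes the relighting application possible: each term can be re-evaluated under lighting the object was never photographed in.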

Relighting

Using the material properties and geometry produced by our model, we can further render the object under novel lighting environments.
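The abstract states that ambient illumination is represented with spherical harmonics. For the diffuse part of a surface, relighting under a new SH environment can be sketched with the well-known nine-coefficient irradiance formula of Ramamoorthi and Hanrahan: evaluate the irradiance E(n) at the surface normal and scale it by albedo / π. The specular term and NeROIC's extensions for transient effects such as sharp shadows are omitted, and the coefficient ordering and function names are assumptions made for this sketch.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Constants from Ramamoorthi & Hanrahan's irradiance formula for 9 SH coefficients.
C1, C2, C3, C4, C5 = 0.429043, 0.511664, 0.743125, 0.886227, 0.247708


def sh_irradiance(L: Sequence[float], n: Vec3) -> float:
    """Irradiance at unit normal n from the 9 SH lighting coefficients of one color channel.

    Assumed coefficient order: [L00, L1-1, L10, L11, L2-2, L2-1, L20, L21, L22].
    """
    x, y, z = n
    L00, L1m1, L10, L11, L2m2, L2m1, L20, L21, L22 = L
    return (C1 * L22 * (x * x - y * y) + C3 * L20 * z * z + C4 * L00 - C5 * L20
            + 2.0 * C1 * (L2m2 * x * y + L21 * x * z + L2m1 * y * z)
            + 2.0 * C2 * (L11 * x + L1m1 * y + L10 * z))


def relight_diffuse(albedo: Vec3, normal: Vec3, sh_rgb: Sequence[Sequence[float]]) -> Vec3:
    """Diffuse radiance albedo * E(n) / pi per channel; negative irradiance is clamped to 0."""
    return tuple(albedo[k] * max(sh_irradiance(sh_rgb[k], normal), 0.0) / math.pi for k in range(3))


uniform = [2.0 * math.sqrt(math.pi)] + [0.0] * 8  # constant unit radiance from every direction
color = relight_diffuse((0.5, 0.25, 1.0), (0.0, 0.0, 1.0), [uniform] * 3)
top_light = [0.0, 0.0, 1.0] + [0.0] * 6  # only L10 set: light arriving from +z
underside = relight_diffuse((1.0, 1.0, 1.0), (0.0, 0.0, -1.0), [top_light] * 3)
```

With only L00 = 2√π set, the environment has unit radiance in every direction, the irradiance is π for any normal, and the output equals the albedo, which makes a quick sanity check.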

Citation

@article{10.1145/3528223.3530177,
  author = {Kuang, Zhengfei and Olszewski, Kyle and Chai, Menglei and Huang, Zeng and Achlioptas, Panos and Tulyakov, Sergey},
  title = {NeROIC: Neural Rendering of Objects from Online Image Collections},
  year = {2022},
  issue_date = {July 2022},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {41},
  number = {4},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3528223.3530177},
  doi = {10.1145/3528223.3530177},
  journal = {ACM Trans. Graph.},
  month = {jul},
  articleno = {56},
  numpages = {12},
  keywords = {neural rendering, reflectance & shading models, multi-view & 3D}
}

The website template was borrowed from Michaël Gharbi.